The energy challenge of powering AI chips

Information processing isn’t the only thing artificial intelligence amplifies; it also intensifies energy consumption and heat generation. To keep pace and keep cool, data centers will need massive upgrades, creating strong structural drivers and potentially recession-proof revenue growth for companies supplying energy-efficient equipment and energy management systems.

Summary

- The chips powering AI are hot and power-hungry

- Current data centers are ill-equipped and in need of a revamp

- Upgrades will benefit the smart energy theme

Artificial intelligence (AI) software is being adopted along one of the fastest adoption curves markets have ever seen. The large language models (LLMs) that ChatGPT and similar AI chatbots use to generate humanlike conversation are just one of many new AI applications that rely on ‘parallel computing’: the massive computational work performed by networks of chips that carry out many calculations or processes simultaneously.

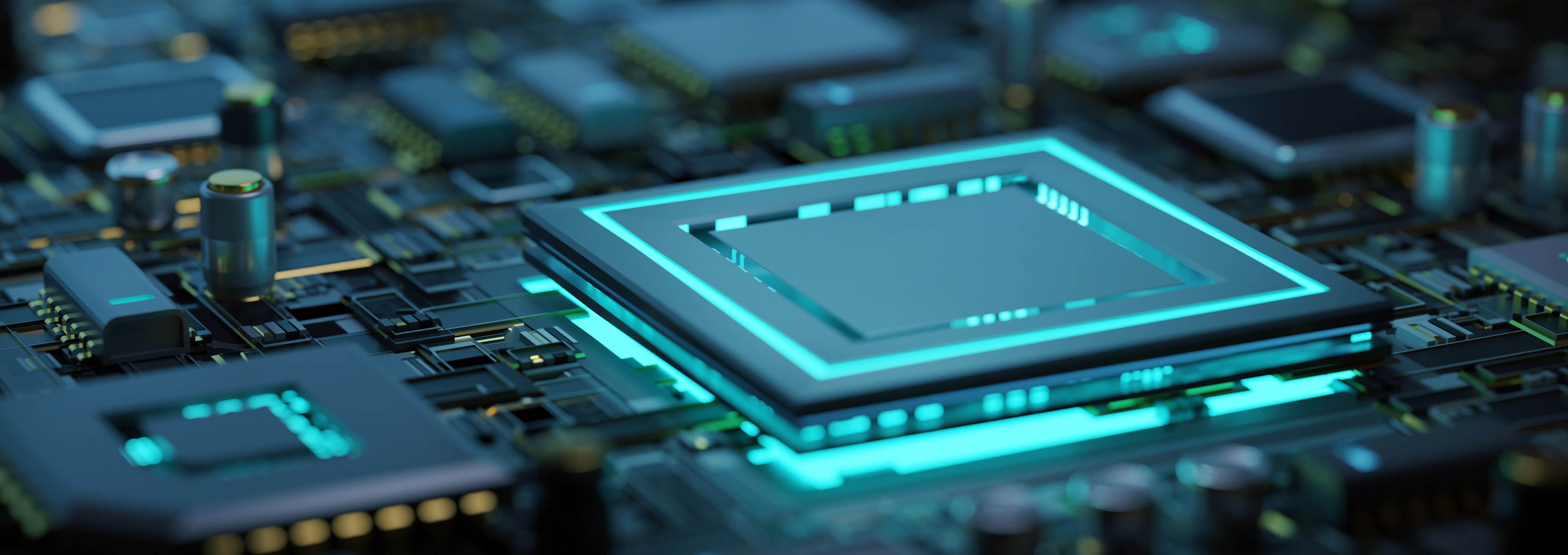

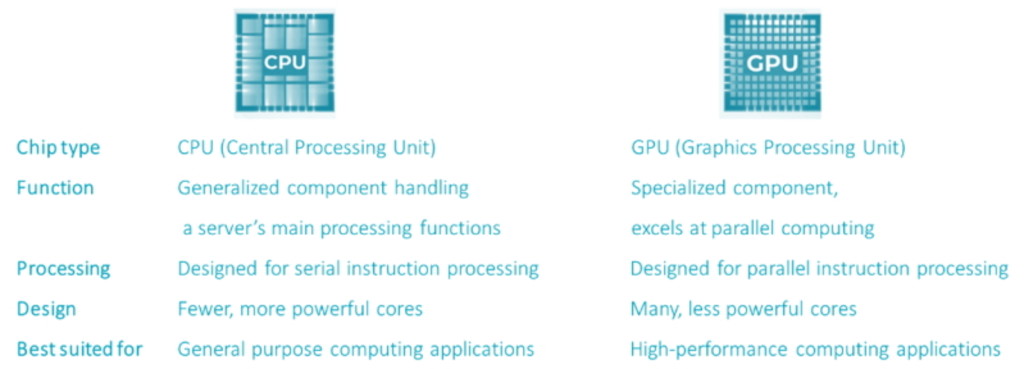

At the core of AI infrastructure are GPUs (graphics processing units), which excel at the specialized, high-performance parallel computing work that AI requires. All that processing power, however, also means higher energy input and more heat output than their CPU (central processing unit) counterparts used in PCs. See Figure 1.

Figure 1 - Core comparison – CPUs vs GPUs

Source: GPU vs CPU – Difference Between Processing Units – AWS (amazon.com)

High-end GPUs are about four times more power-dense than CPUs. This poses significant new problems for data center planning, as the originally calculated power supply now covers only 25% of what modern AI data centers need. Even the cutting-edge hyperscaler data centers that Amazon, Microsoft and Alphabet use for cloud computing are still CPU-driven. To illustrate, Nvidia’s currently supplied A100 AI chip has a constant power consumption of roughly 400W per chip, whereas its latest chip, the H100, nearly doubles that at 700W, similar to a microwave. If a full hyperscaler data center with an average of one million servers replaced its current CPU servers with these types of GPUs, the power needed would increase 4-5 times (to 1,500MW), equivalent to a nuclear power station!
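
The figures in this paragraph can be checked with simple arithmetic. The sketch below uses only the numbers quoted above; the “implied CPU-era load” is our own back-calculation (1,500MW divided by the stated 4-5x multiple), not a sourced figure.

```python
# Worked arithmetic for the power figures quoted in the text.
# All inputs are taken from the article; the implied baseline is derived, not sourced.

POWER_DENSITY_RATIO = 4.0   # high-end GPUs vs CPUs, per the text
A100_WATTS = 400            # Nvidia A100 power draw, per the text
H100_WATTS = 700            # Nvidia H100 power draw, per the text
GPU_FACILITY_MW = 1500      # hyperscaler data center converted to GPUs, per the text


def share_of_need_covered(density_ratio: float) -> float:
    """Fraction of the new load met by a supply sized for the old load."""
    return 1.0 / density_ratio


def implied_baseline_mw(target_mw: float, multiple: float) -> float:
    """Back out the original load implied by a target load and a growth multiple."""
    return target_mw / multiple


if __name__ == "__main__":
    print(f"Original supply covers {share_of_need_covered(POWER_DENSITY_RATIO):.0%} of need")
    print(f"H100 vs A100: {H100_WATTS / A100_WATTS:.2f}x per chip")
    for multiple in (4, 5):
        baseline = implied_baseline_mw(GPU_FACILITY_MW, multiple)
        print(f"{multiple}x increase implies a CPU-era load of ~{baseline:.0f} MW")
```

Run as-is, it reports 25% coverage, a 1.75x per-chip increase (the “nearly doubles” above) and an implied CPU-era load of roughly 300-375MW.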

This increase in power density means that these chips also generate significantly more heat, so cooling systems must become more powerful too. Power and cooling changes of this magnitude will require entirely new designs for future AI-driven data centers. This creates a huge demand-supply imbalance in the underlying chip and data center infrastructure. Given the time it takes to build data centers, industry experts project that we are in the first innings of a decade-long modernization to make data centers more intelligent.

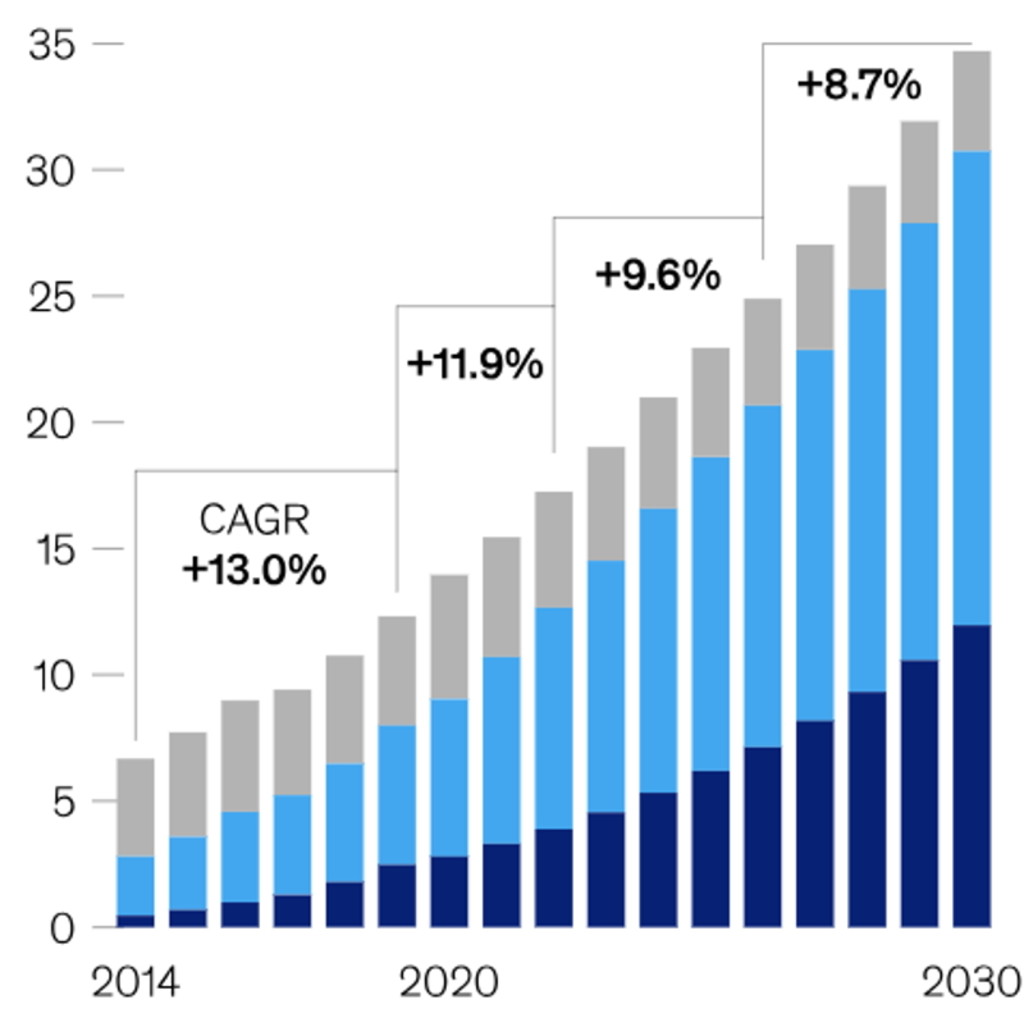

Figure 2 - Growth in power consumption of US data centers (gigawatts)

Includes power consumption for storage, servers and networks. Gray indicates the energy consumption of enterprise data centers owned and operated by the companies themselves; light blue indicates ‘co-location’ companies that rent out and manage IT data center facilities on behalf of other companies; dark blue indicates hyperscaler data centers.

Source: McKinsey & Company, Investing in the rising data center economy, 2023.

Operation overhaul – Equipping data centers for AI needs

Structural changes of this magnitude will lead to massive upgrades of not only chips and servers but also the electric infrastructure that supplies them with the power to operate.

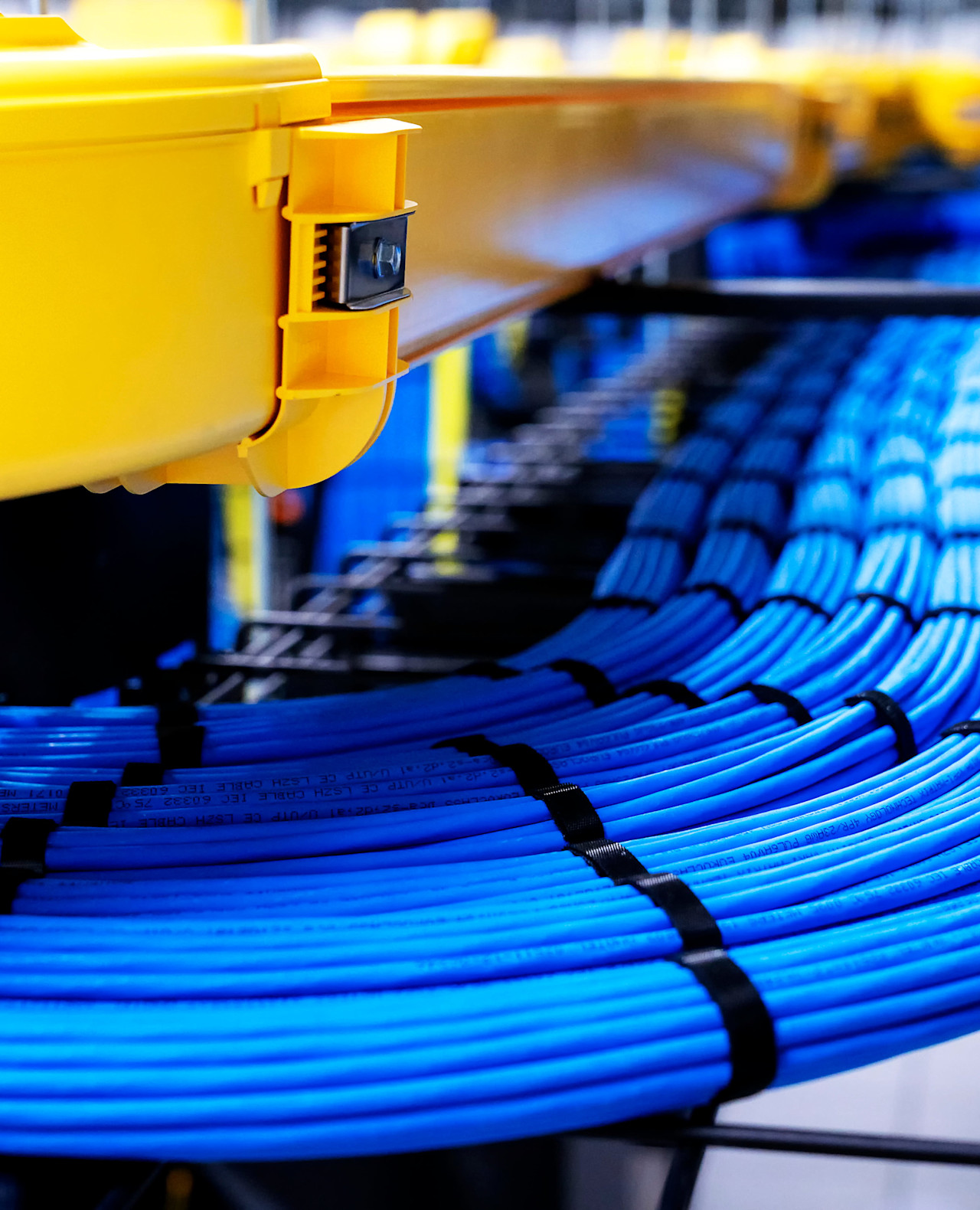

The laws of physics dictate that supplying more power means increasing the voltage and/or the current (power = voltage × current). Increasing the voltage is the more viable option.1 Accordingly, the industry is working on higher voltages, which requires redesigning many standard components that were defined in the early era of computers and servers, when power densities were relatively low (2-3kW per rack). This means new configurations for power cords, power distribution racks and converters, since the new requirements exceed current formats (see Figure 3). At the chip level the challenges are even bigger: higher voltage, and therefore more power for the GPU, requires a full redesign of the chip’s power layout.
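
The preference for higher voltage follows from the power formula. For a fixed load the current is I = P / V, and the resistive loss in the conductors grows with the square of that current (I²R), which is why carrying more current calls for thicker wires. The sketch below is purely illustrative: the 40kW rack load, the bus voltages and the 0.1 mΩ conductor resistance are assumed values, not figures from this article.

```python
# Illustrative only: rack load, bus voltages and resistance are assumed values.

RACK_POWER_W = 40_000      # assumed GPU rack load (the text cites racks above 40kW)
CONDUCTOR_OHMS = 0.0001    # hypothetical resistance of the rack power path (0.1 milliohm)


def current_amps(power_w: float, voltage_v: float) -> float:
    """Rearranged power law: I = P / V."""
    return power_w / voltage_v


def conduction_loss_w(current_a: float, resistance_ohm: float) -> float:
    """Resistive loss dissipated in the conductors: I^2 * R."""
    return current_a ** 2 * resistance_ohm


if __name__ == "__main__":
    for volts in (12, 48, 400):  # assumed comparison voltages
        amps = current_amps(RACK_POWER_W, volts)
        loss = conduction_loss_w(amps, CONDUCTOR_OHMS)
        print(f"{volts:>4} V: {amps:8.1f} A, I^2R loss {loss:8.1f} W")
```

For the same power delivered, quadrupling the voltage from 12V to 48V cuts the current to a quarter and the resistive loss to a sixteenth.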

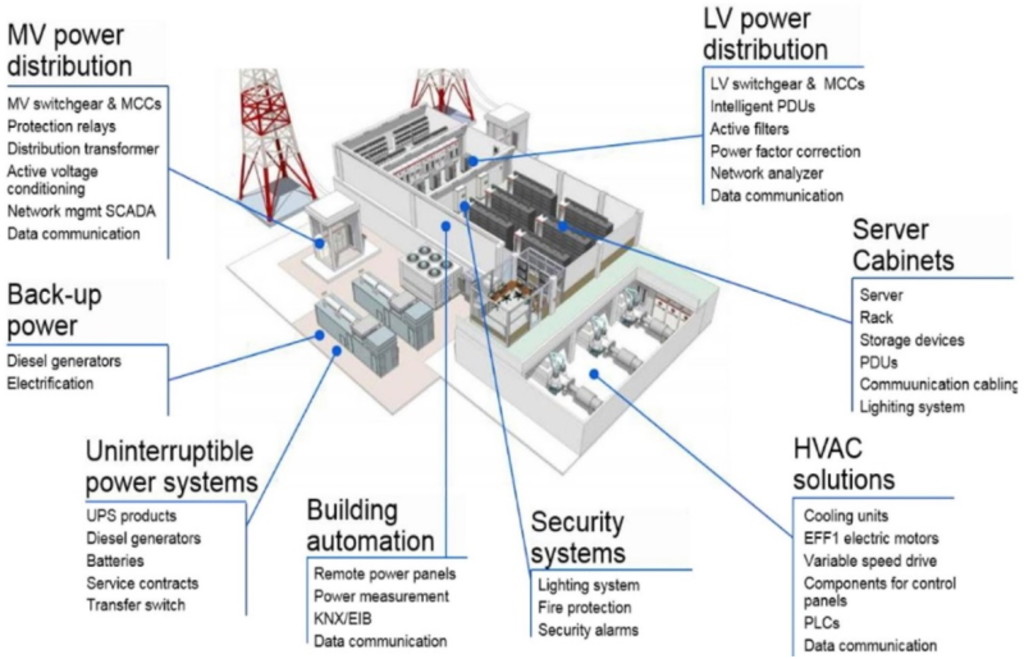

Figure 3 – More than a cable and plug – The complexity of data center electrical systems

Powering a data center involves multiple interconnected systems that must function seamlessly for optimal processing performance. LV = Low voltage devices, MV = Medium voltage devices

Source: Green Data Center Design and Management

Data center cooling is key to ensuring high system performance and preventing malfunctions. Traditional HVAC2 solutions, which use air conditioning and fans to cool the air in server rooms, are sufficient for CPU server racks with power densities between 3 and 30kW, but not for GPU racks, whose power densities easily exceed 40kW.3 As the latest GPU racks go beyond these power levels, additional liquid cooling is (once again) at the forefront. Liquid cooling allows for even higher heat dissipation at the server rack or chip level, because liquids can capture more heat per unit volume than air. However, some of the biggest challenges for liquid cooling are 1) the lack of standardized designs and components for such systems, 2) the range of technology options, such as chip-level or rack-level cooling, and 3) the high cost of pipes and of measures to prevent leakage.
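
The heat-capacity argument can be quantified with the standard heat-transport relation Q = ρ · c_p · V̇ · ΔT. The sketch below estimates the coolant flow needed to remove the 44kW average GPU rack load cited in footnote 3. It assumes that essentially all electrical power drawn by the rack ends up as heat, uses rounded textbook properties for air and water at room temperature, and picks a 10 K coolant temperature rise as an illustrative assumption.

```python
# Rounded room-temperature properties (textbook approximations).
AIR_DENSITY = 1.2        # kg/m^3
AIR_CP = 1005.0          # J/(kg*K)
WATER_DENSITY = 1000.0   # kg/m^3
WATER_CP = 4186.0        # J/(kg*K)

RACK_HEAT_W = 44_000     # average GPU rack per Schneider Electric (footnote 3)
DELTA_T_K = 10.0         # assumed coolant temperature rise


def volumetric_heat_capacity(density: float, cp: float) -> float:
    """Heat absorbed per cubic metre per kelvin of temperature rise, in J/(m^3*K)."""
    return density * cp


def required_flow_m3_per_s(heat_w: float, density: float, cp: float, delta_t: float) -> float:
    """Coolant volume flow needed to carry away heat_w: V_dot = Q / (rho * cp * dT)."""
    return heat_w / (volumetric_heat_capacity(density, cp) * delta_t)


if __name__ == "__main__":
    air = volumetric_heat_capacity(AIR_DENSITY, AIR_CP)
    water = volumetric_heat_capacity(WATER_DENSITY, WATER_CP)
    print(f"Water holds ~{water / air:,.0f}x more heat per unit volume than air")
    air_flow = required_flow_m3_per_s(RACK_HEAT_W, AIR_DENSITY, AIR_CP, DELTA_T_K)
    water_flow = required_flow_m3_per_s(RACK_HEAT_W, WATER_DENSITY, WATER_CP, DELTA_T_K)
    print(f"Air:   {air_flow:.2f} m^3/s")
    print(f"Water: {water_flow * 1000:.2f} L/s")
```

With these inputs, water’s volumetric heat capacity comes out roughly 3,500 times that of air, so the same rack needs about 3.6 m³ of air per second but only about 1 litre of water per second. Real systems add fan, pump and heat-exchanger constraints, so this is an order-of-magnitude comparison only.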

Our outlook on the AI revolution and its impact on data centers’ energy requirements

The AI revolution will require nothing short of a complete re-engineering of data center infrastructure, from the inside out, to accommodate the much higher energy needs of new AI technology. This will strongly boost demand for, and investment in, low-power computing applications, energy-efficient HVAC and power management solutions for data centers. All of these Big Data energy efficiency solutions are key investment areas for the smart energy strategy.

Revenues for companies supplying energy efficiency solutions to data centers are expected to grow strongly. This has also led to a re-rating in the market, with these companies now trading toward the upper end of their historical valuation range on the back of the much stronger growth outlook. Given the strong momentum and underlying structural drivers, we are fundamentally very positive on this part of the smart energy investment universe. We also believe that this area can decouple from a potential recession, as spending on much-needed data centers and related energy efficiency solutions will not depend on the economic cycle.

Footnotes

1 Increasing the current requires thicker wires and costs valuable space – not a viable option for the tightly packed server rack layout of today’s data centers.

2 Heating, ventilation and air conditioning

3 According to Schneider Electric, the average power density of GPU server racks is 44kW.

Important information

The contents of this document have not been reviewed by the Securities and Futures Commission ("SFC") in Hong Kong. If you are in any doubt about any of the contents of this document, you should obtain independent professional advice. This document has been distributed by Robeco Hong Kong Limited (‘Robeco’). Robeco is regulated by the SFC in Hong Kong. This document has been prepared on a confidential basis solely for the recipient and is for information purposes only. Any reproduction or distribution of this documentation, in whole or in part, or the disclosure of its contents, without the prior written consent of Robeco, is prohibited. By accepting this documentation, the recipient agrees to the foregoing. This document is intended to provide the reader with information on Robeco’s specific capabilities, but does not constitute a recommendation to buy or sell certain securities or investment products. Investment decisions should only be based on the relevant prospectus and on thorough financial, fiscal and legal advice. Please refer to the relevant offering documents for details including the risk factors before making any investment decisions. The contents of this document are based upon sources of information believed to be reliable. This document is not intended for distribution to or use by any person or entity in any jurisdiction or country where such distribution or use would be contrary to local law or regulation. Investment involves risks. Historical returns are provided for illustrative purposes only and do not necessarily reflect Robeco’s expectations for the future. The value of your investments may fluctuate. Past performance is no indication of current or future performance.